Are you tired of AI usage limits? Are you concerned about your privacy when sending data to cloud-based tools like ChatGPT? Or perhaps you need to use AI while traveling, even without an internet connection?

If any of these concerns sound familiar, it's time to start running AI locally.

In this post, we'll explain the difference between running AI locally versus using cloud-based apps like ChatGPT, cover the pros and cons, and show you how to get started with easy-to-use local AI apps.

Most people use AI through online tools such as ChatGPT, Claude, and Gemini. Here is how these cloud-based tools operate:

• You send a request from your computer over the internet.

• The request goes to the AI company's servers, where it is processed, and a response is generated.

• The response is then sent back to you and displayed in your browser.

In this setup, your machine is only sending messages and receiving replies; it is not actually running the AI itself.

When you run AI locally, the model itself is stored on your device, and your requests never leave your machine.

To achieve this, you need to download an AI model to your computer along with specialized software to run it.

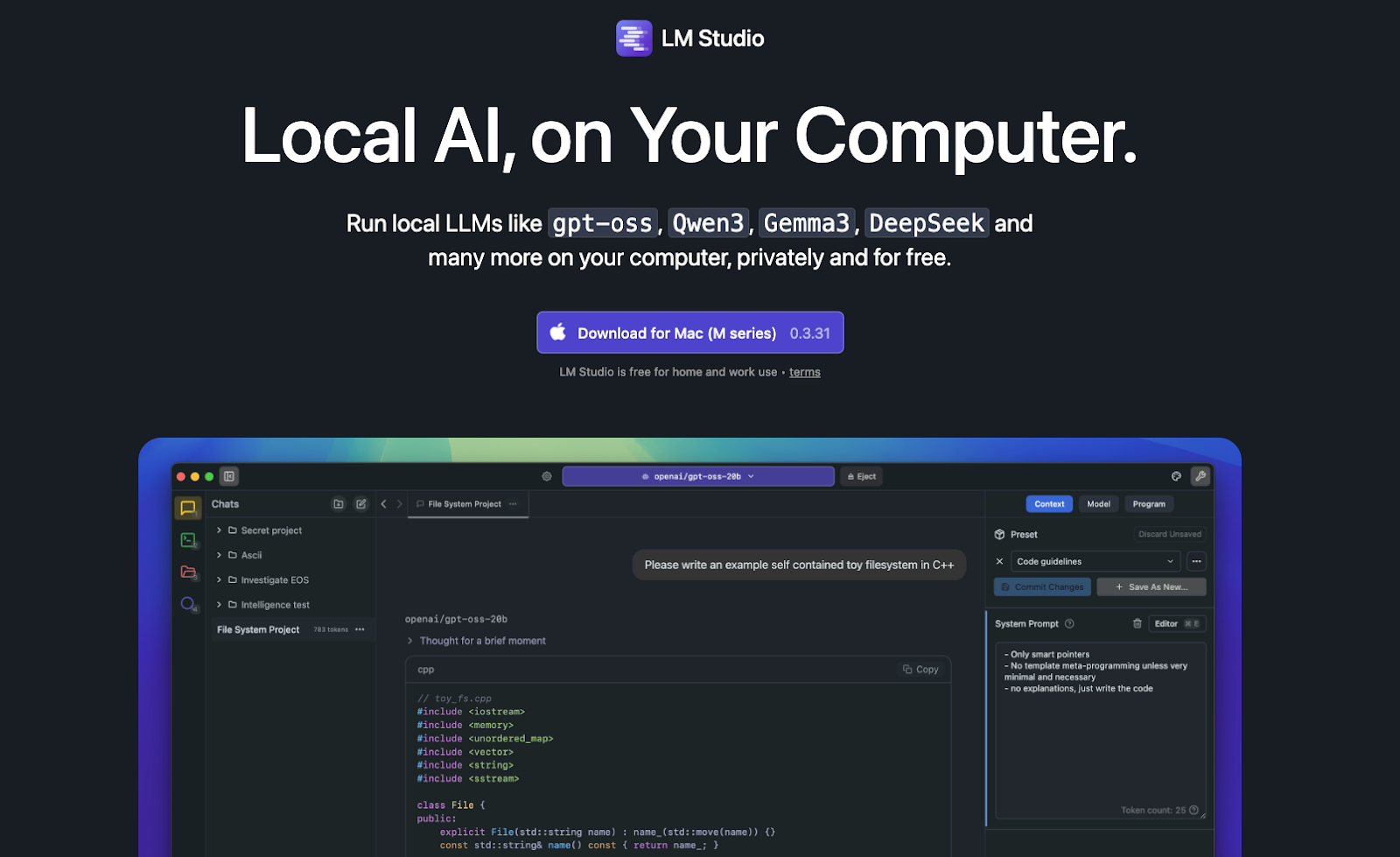

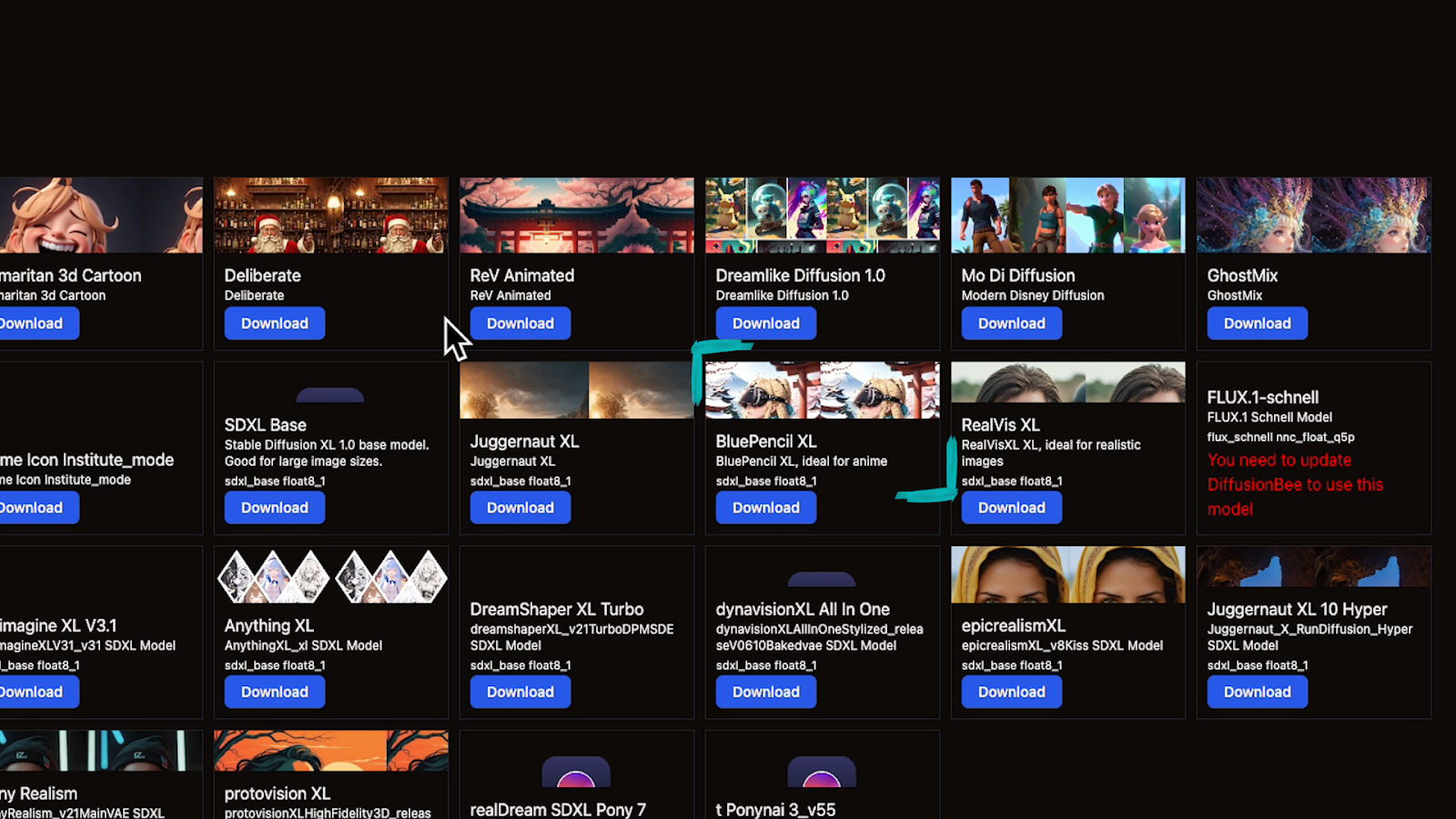

Examples of this software include LM Studio, DiffusionBee, and Comfy. While these apps offer a limited selection of models you can download directly, a much larger repository of models can be found on platforms like Hugging Face.

There are five key advantages to running AI locally:

1. No Usage Limits: Cloud apps like ChatGPT often limit your daily responses, especially during heavy usage. When running locally, you can send as many prompts as you want because you are using your own computer and resources.

2. It's Free: You don't need a subscription to run AI on your own machine. Apps like LM Studio, DiffusionBee, and Comfy are all free.

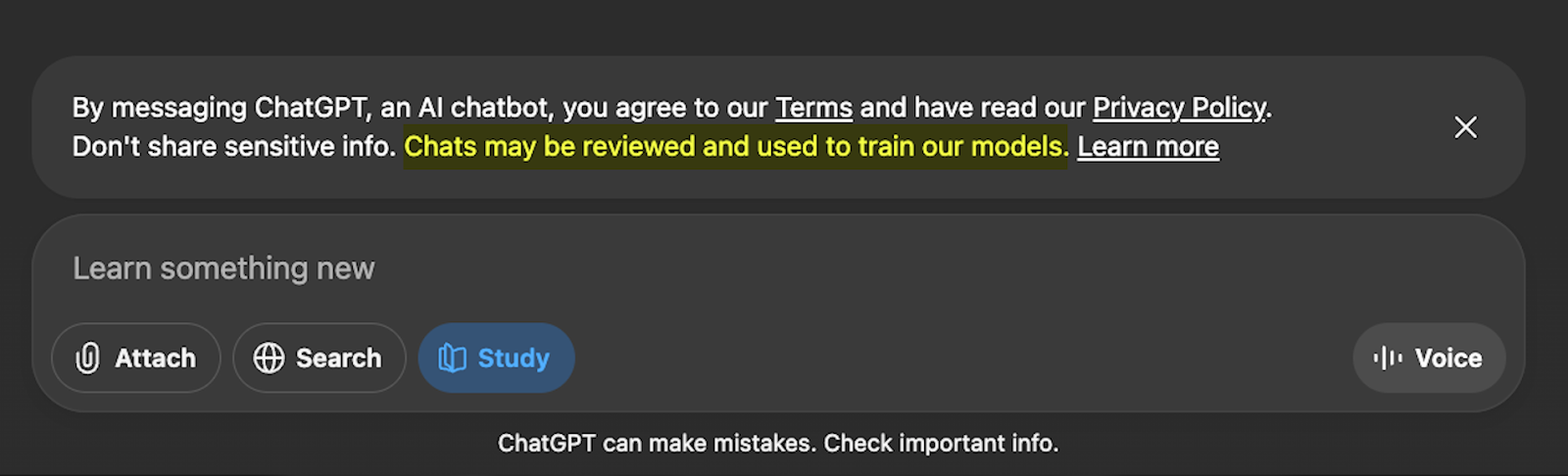

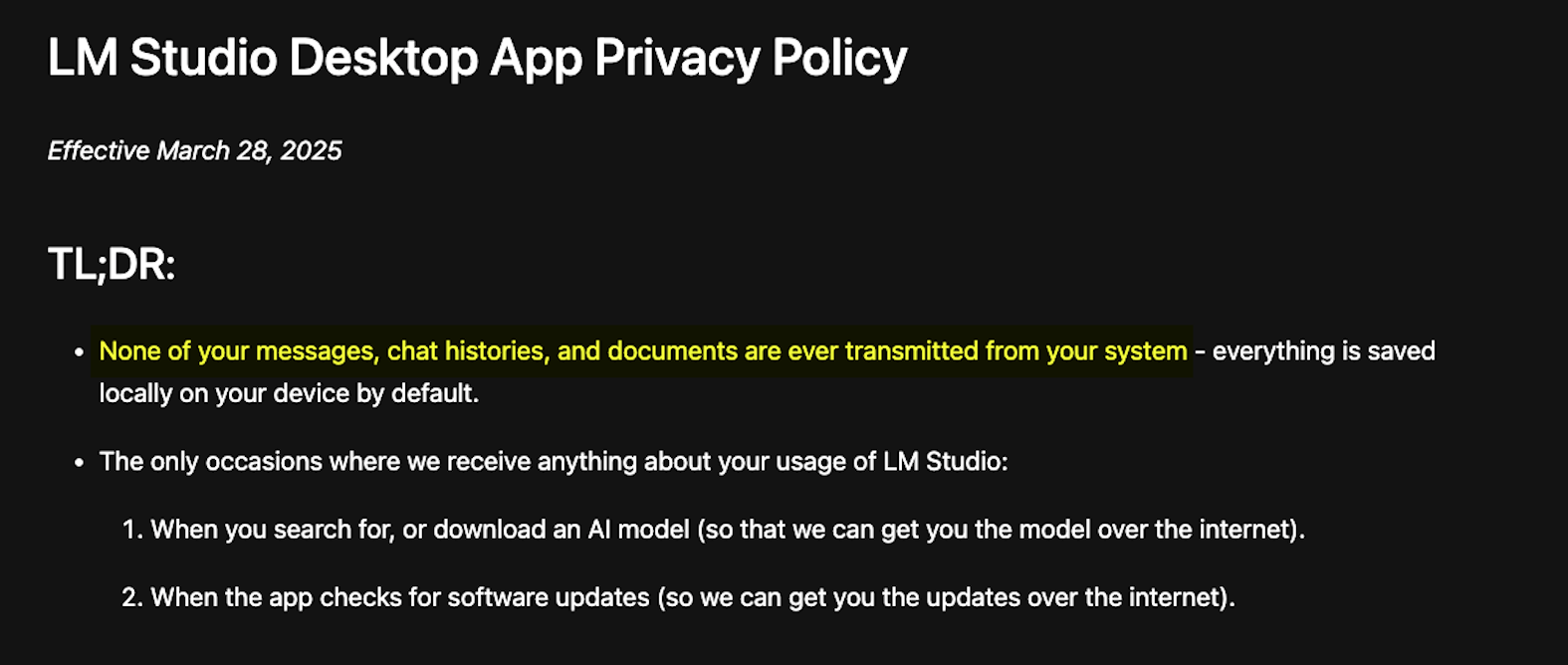

3. Improved Privacy: Cloud-based AI companies like OpenAI, Anthropic, and Google can access the messages you send to their tools and, depending on your plan and settings, may use them to train their models.

By contrast, when running locally, your messages don't need to be sent over the internet, and those companies will never see them.

Note: Be sure to check the privacy and data usage policies of any AI app before using it, particularly if you intend to use it for business. Above, you can see an excerpt of LM Studio’s data policies (screenshot taken Nov 17th, 2025).

4. Offline Access: Running AI locally is excellent for areas with spotty internet or for use while traveling, as it allows you to access AI tools without needing a strong, stable connection.

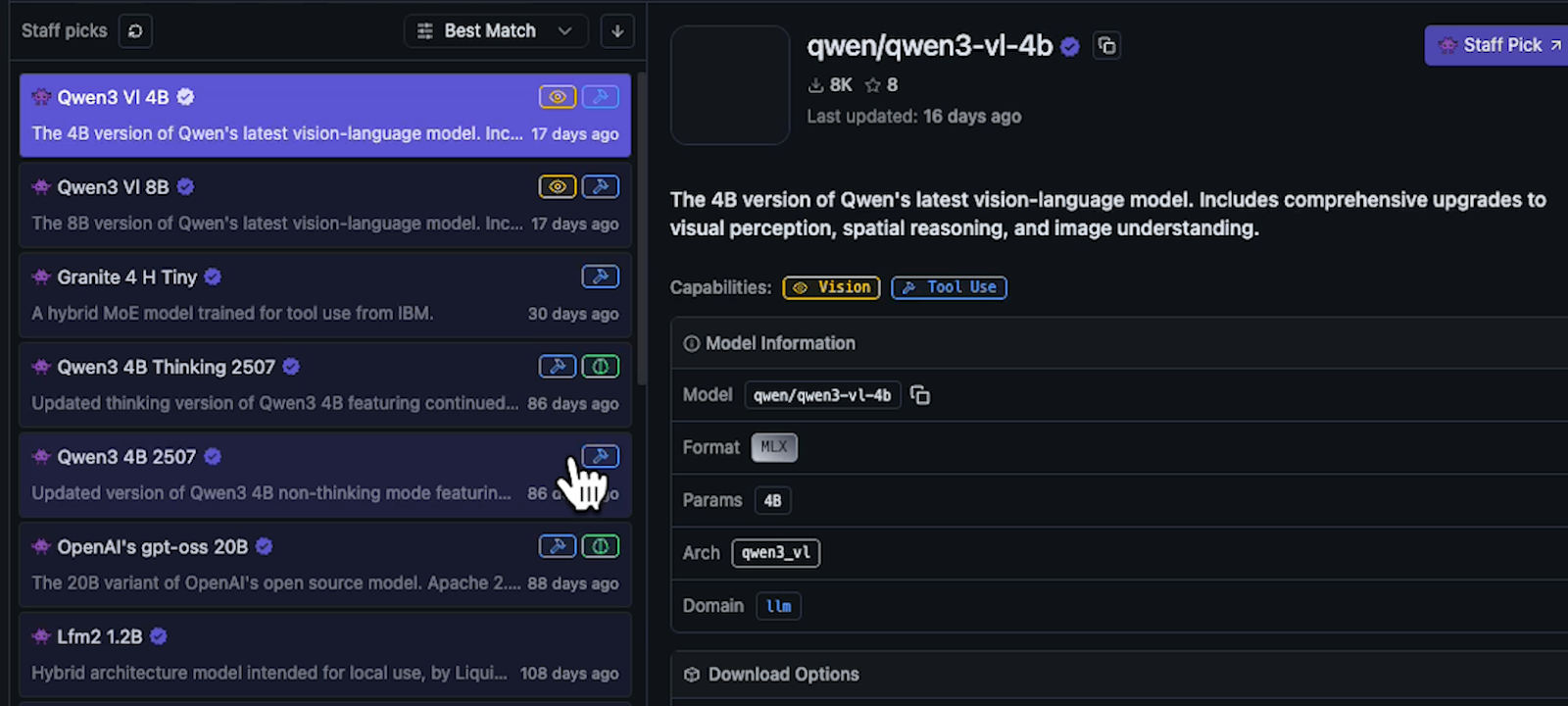

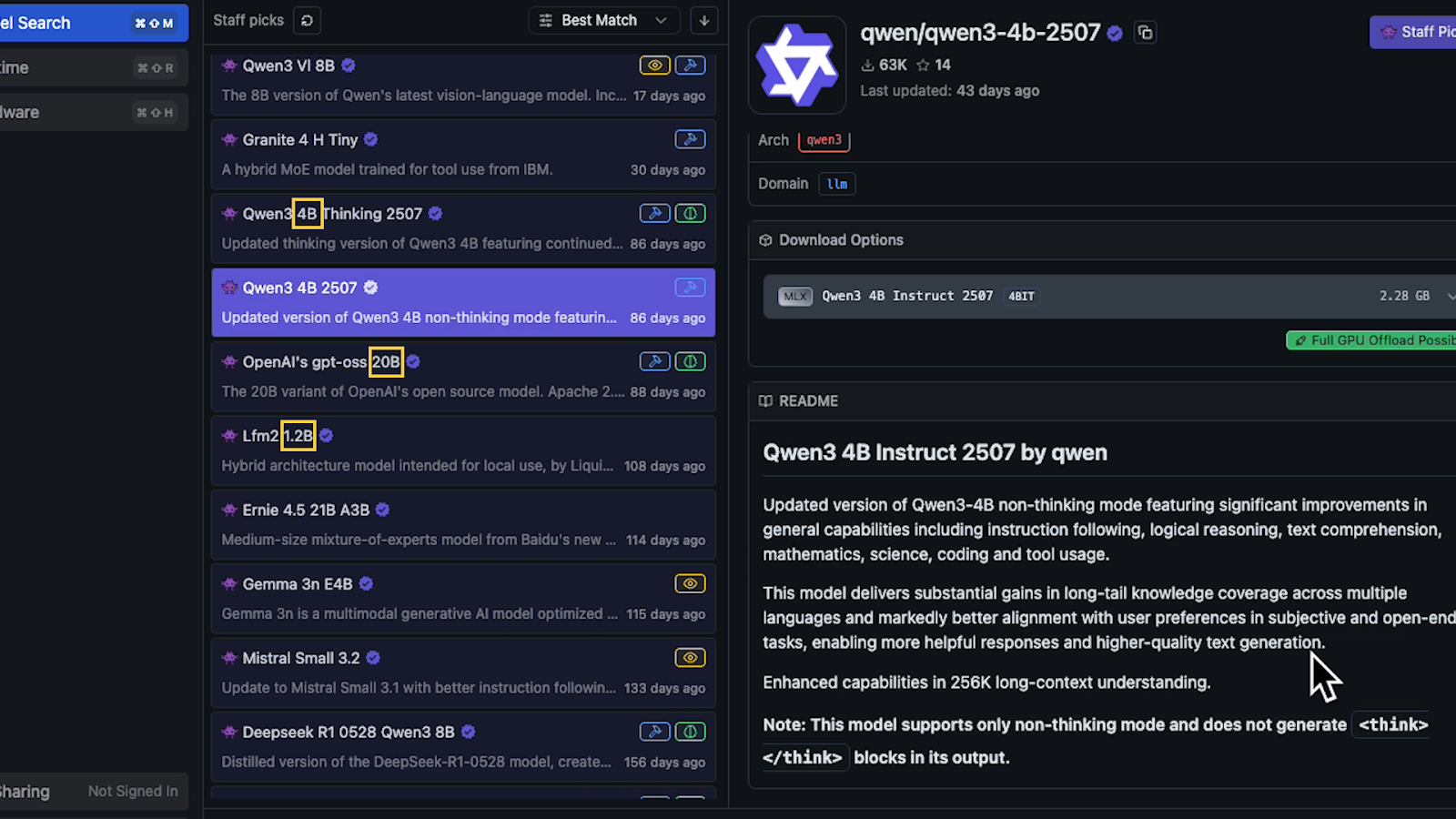

5. Choice of Model: You are not limited to models from a single company. You can choose from a wide range of models, including IBM Granite, OpenAI's open-source gpt-oss models, Qwen, and others.

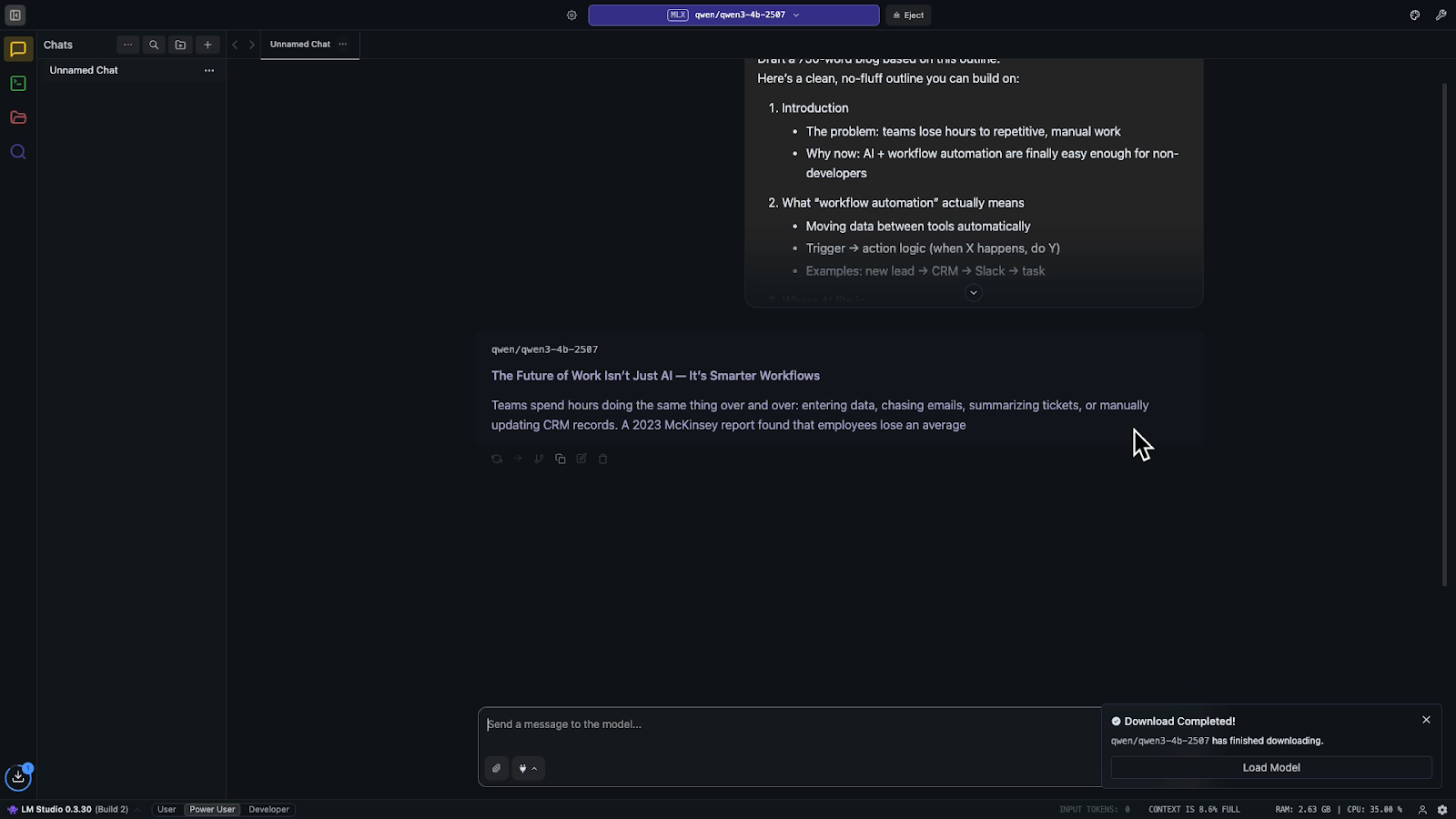

The screenshot shows a few of the models available to download in LM Studio.

Many of these models are fine-tuned to excel at specific tasks, industries, and niche use cases.

While local AI offers many benefits, there are also some drawbacks:

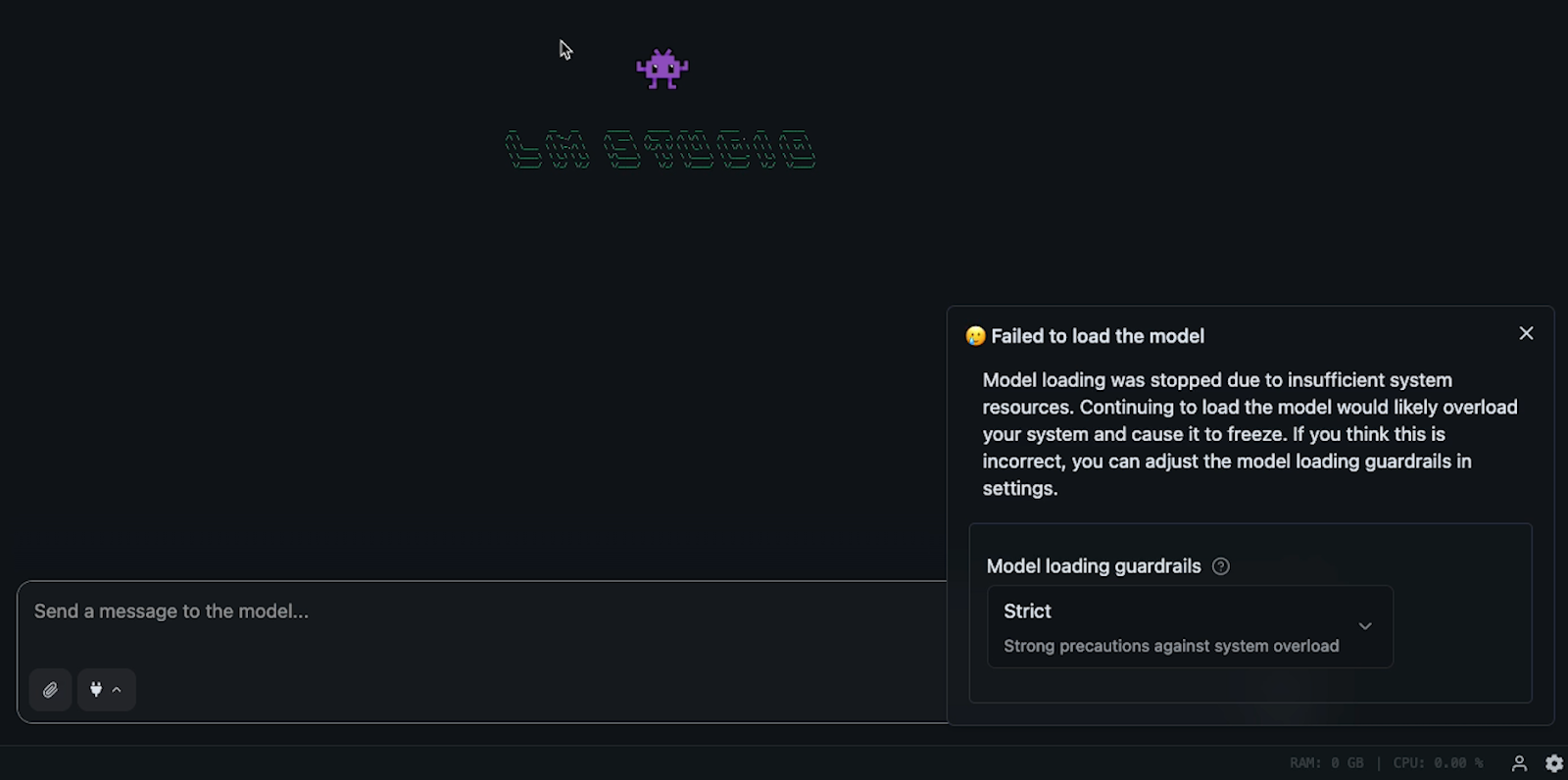

Resource-Intensive: Good AI models are large and require significant resources. Processing the billions of parameters in these models demands a lot of RAM and VRAM (the dedicated memory on your graphics card). Working with images and video is especially resource-intensive.

Hardware Limitations: If you are using a standard laptop, you may be limited in the models you can choose and operate effectively. You'll typically need to stick with smaller, simpler models with fewer parameters.

Less computing power also means slower answers from the AI. Average computers likely won’t offer the same lightning-fast speeds as ChatGPT, Claude, or Gemini.

No Access to Top Models: You won't get access to the absolute latest and best proprietary models from companies like OpenAI, Anthropic, or Google, as these companies do not release them for download. You will need to use open-source alternatives instead.

We encourage you not to let these drawbacks dissuade you. Since downloading these tools and trying them out is completely free, getting first-hand experience is the best way to decide if local AI fits into your workflows.

To see how running AI locally works in practice, we recommend starting with two free and easy-to-use apps:

LM Studio: Great for text-based work.

DiffusionBee: Good for generating images.

For generating AI video, we’d recommend trying out Comfy. However, getting started with Comfy is quite a bit more complicated, and we won’t be including a guide for it in this post.

We may create a standalone tutorial for Comfy in the future.

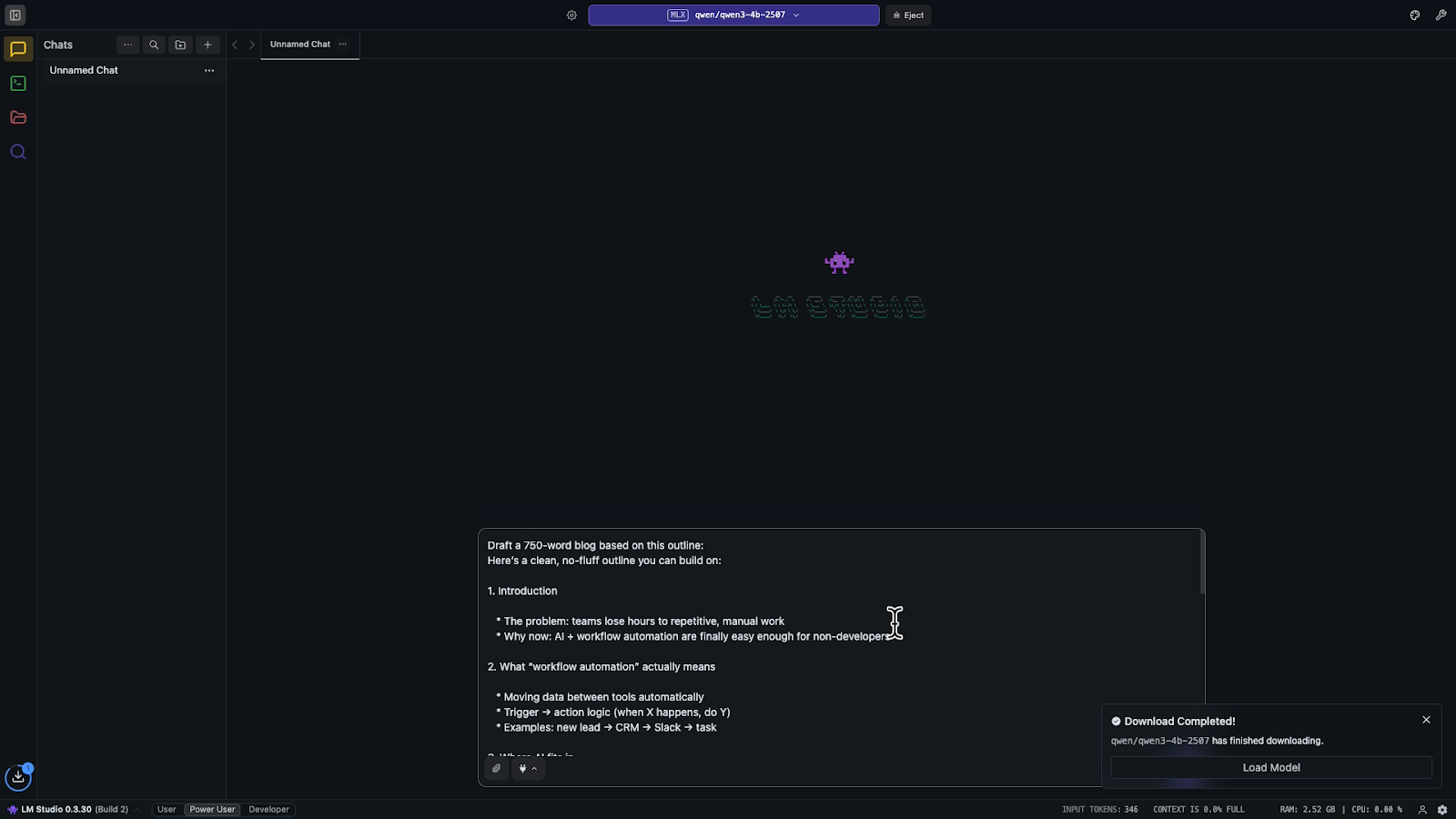

1. Download and Open: Download the app at lmstudio.ai and open it up. During initial setup, we recommend choosing "Power User Mode," as the "User" mode hides essential features and can be more confusing.

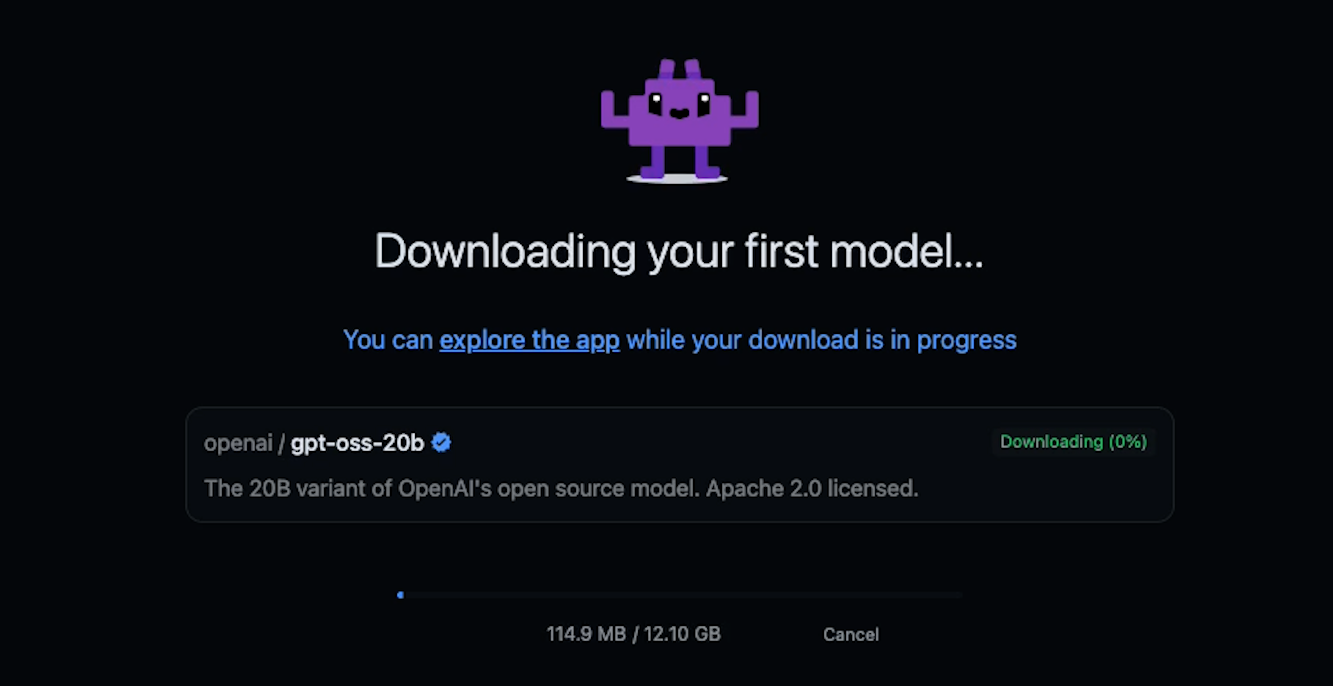

2. Get a Model: Once installed, you need an AI model to use. LM Studio will recommend a default model to use, such as gpt-oss-20b (an open-source model from OpenAI).

However, you should be aware that the default model may be too resource-intensive for your computer, especially if you have older hardware.

3. Choose a Lighter Model (Optional): If your computer is unable to run the default model, you can download something lighter, like a small Qwen3 model or IBM Granite 4 Micro.

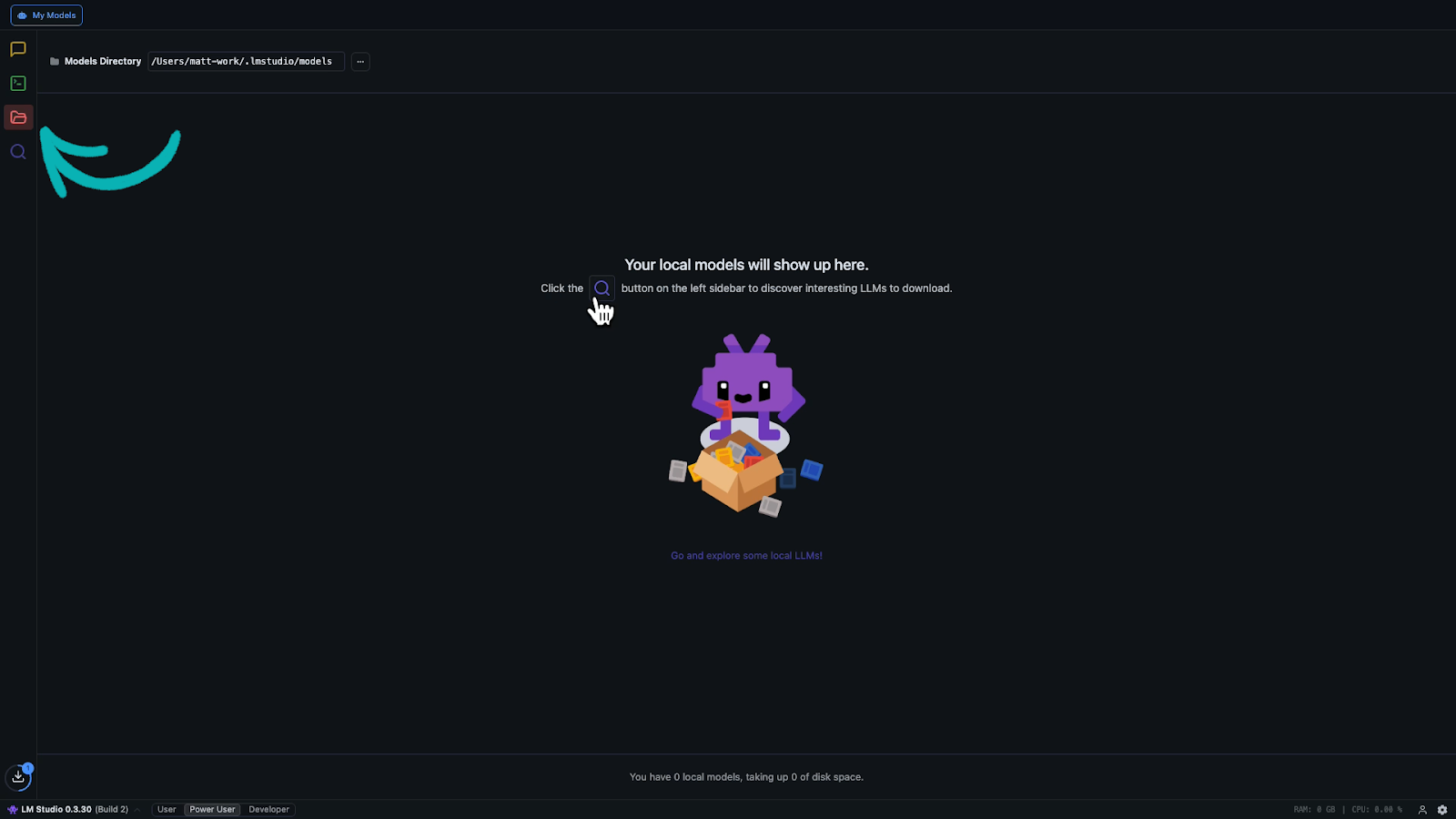

LM Studio lets you download models right from the app. Click on the Folder icon to access your installed models and search for new ones.

Models are tagged with "B" (e.g., 4B, 20B), which refers to billions of parameters. More parameters usually mean better output quality but require more memory and computing power. For lower-powered systems, go with a lower parameter count.
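To get a feel for what the "B" number means in practice, here is a rough back-of-the-envelope sketch in Python. It rests on a common rule of thumb rather than anything published by LM Studio: the memory needed just to hold a model's weights is roughly the number of parameters times the bytes stored per parameter. Many downloadable models also come in compressed ("quantized") versions that use fewer bits per parameter. The 20% overhead factor for context and the app itself, and the function names `weights_gb` and `likely_fits`, are hypothetical choices made for illustration.

```python
# Back-of-the-envelope estimate of how much memory a model's weights need.
# Assumptions (rules of thumb, not figures from LM Studio):
#   - memory for weights ~= number of parameters x bytes per parameter
#   - running the model needs extra room for context and the app itself,
#     modeled here as a hypothetical 20% overhead factor

BYTES_PER_PARAM = {
    "16-bit": 2.0,  # full-size weights
    "8-bit": 1.0,   # compressed (quantized) weights
    "4-bit": 0.5,   # more heavily compressed weights
}

OVERHEAD = 1.2  # hypothetical allowance for context and runtime overhead


def weights_gb(billions_of_params: float, precision: str) -> float:
    """Approximate size of the weights in GB (1 GB = 1e9 bytes)."""
    # (billions x 1e9 params) x bytes per param / 1e9 bytes per GB
    return billions_of_params * BYTES_PER_PARAM[precision]


def likely_fits(billions_of_params: float, precision: str, memory_gb: float) -> bool:
    """Rough check: do the weights plus overhead fit in the available memory?"""
    return weights_gb(billions_of_params, precision) * OVERHEAD <= memory_gb


if __name__ == "__main__":
    for size in (4, 20):
        for precision in ("16-bit", "4-bit"):
            need = weights_gb(size, precision)
            ok = likely_fits(size, precision, memory_gb=16)
            print(f"{size}B at {precision}: ~{need:.0f} GB of weights, "
                  f"fits in 16 GB? {ok}")
```

Under these assumptions, a 20B model stored at 16 bits needs about 40 GB for its weights alone, while a 4-bit version needs about 10 GB. Treat the results as ballpark figures only, because actual requirements vary by model, settings, and hardware.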

Tip: You can visit livebench.ai to review performance benchmarks for many popular AI models.

4. Chat: After downloading, go to the Chat tab and load your model using the dropdown.

You can now send prompts just like with ChatGPT or Claude.

The speed will depend on your hardware, but you can use the AI as much as you’d like with no subscription fees and no usage limits.

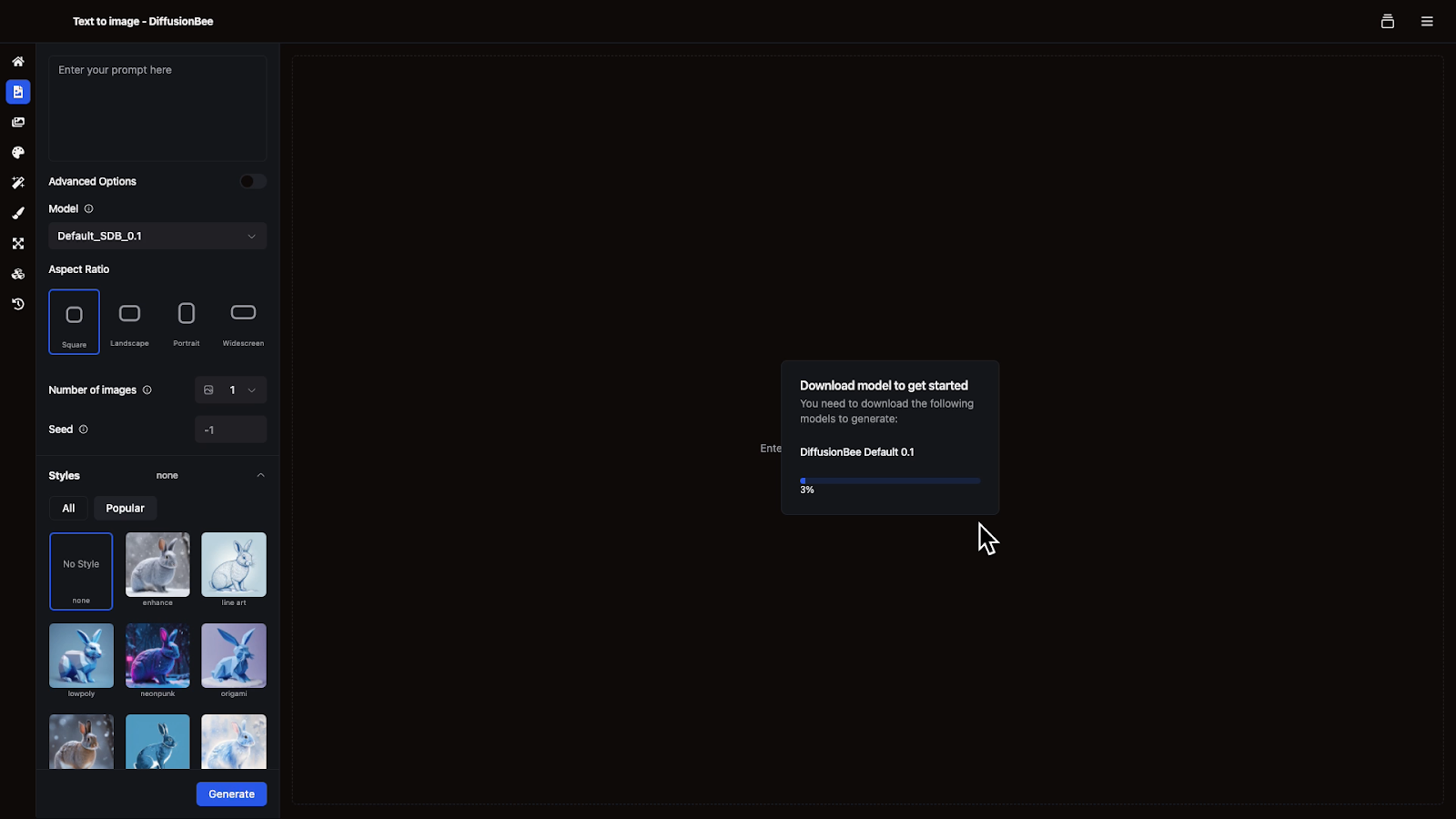

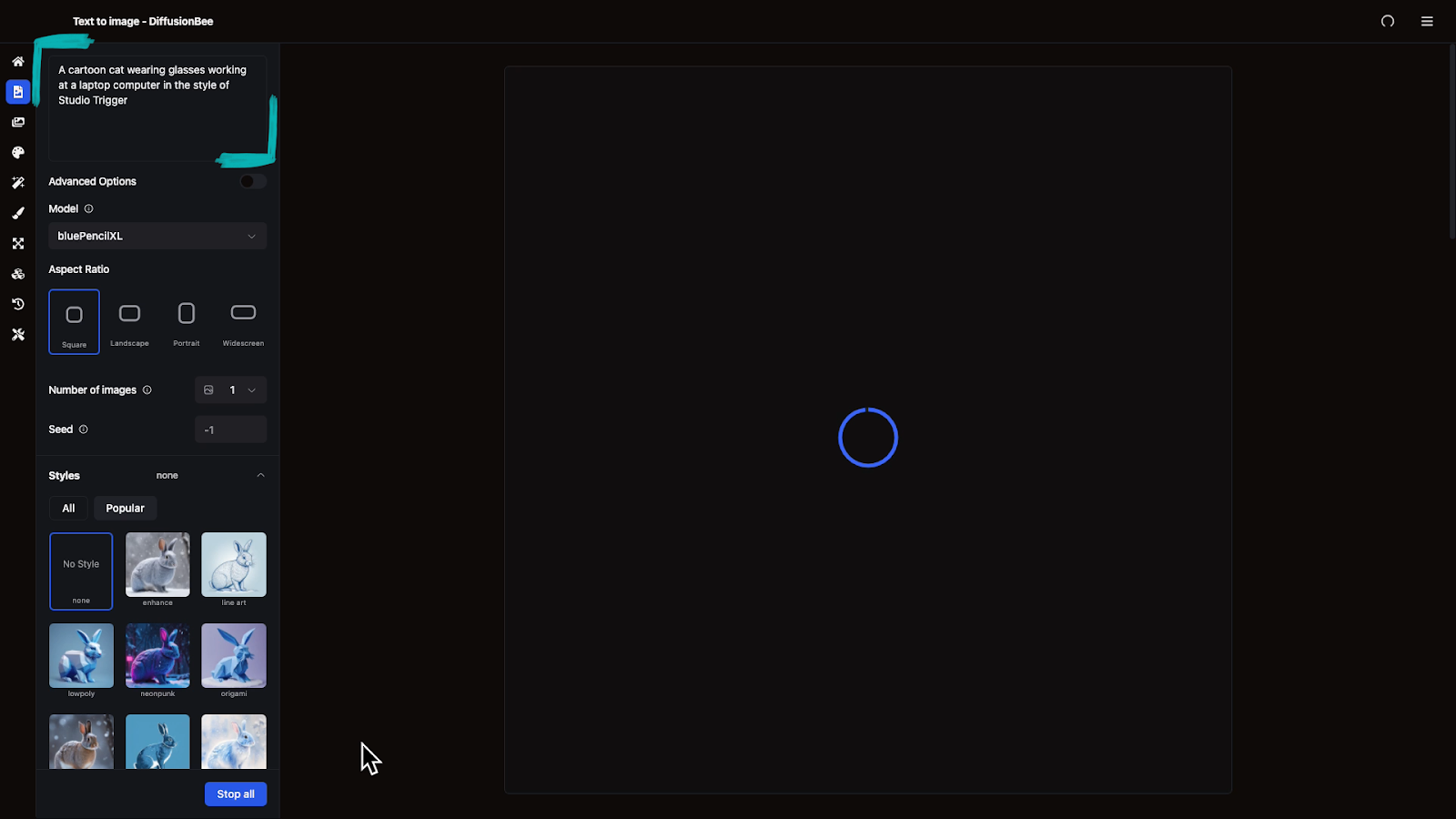

The DiffusionBee setup is just as simple as LM Studio:

1. Download and Open: Download the app at diffusionbee.com and open it up.

2. Select a Model: DiffusionBee provides a default model you can use, but its output is very underwhelming.

Click on “Models” (stacked cubes icon) in the left-hand menu, and download the model of your choice.

For example, you might choose Blue PencilXL for anime or illustration.

3. Generate: Select “Text to image” (document icon). Compose a prompt, adjust the settings as desired, and send your request.

Generation may take a little while depending on your hardware, but on many machines your image should be ready within a minute or so.

With DiffusionBee, you can generate as many images as you want with no cost or usage limits.

Cloud-based AI is incredibly convenient, and it has become one of the biggest industries in the world. However, if you're looking for a free alternative with no usage limits and better privacy, you should give local AI tools a try. Download apps like LM Studio and DiffusionBee to get started.

—

At XRAY, our first priority is cleaning up your most critical workflows to produce the results you need. Then, we use tools like automation and AI to make everything faster.

Click here to learn more and book a free call.

We offer a long-term retainer for total workflow transformation, as well as flexible hourly support for smaller projects.